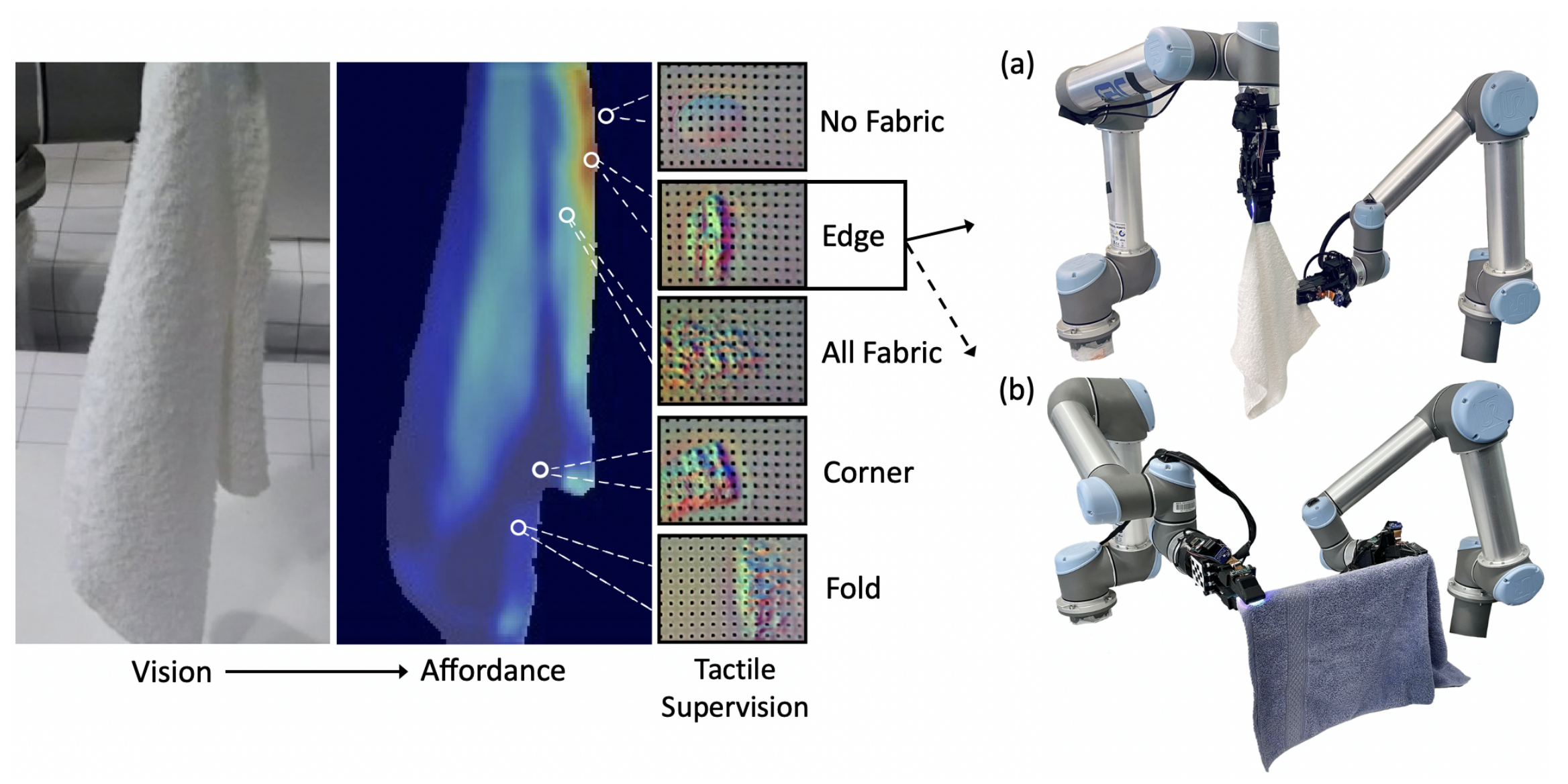

Cloth in the real world is often crumpled, self-occluded, or folded in on itself such that key regions, such as corners, are not directly graspable, making manipulation difficult. We leverage visual and tactile perception to unfold the cloth via grasping and sliding on edges. By doing so, the robot is able to grasp two adjacent corners, enabling subsequent manipulation tasks like folding or hanging. As components of this system, we develop tactile perception networks that classify whether an edge is grasped and estimate the pose of the edge. We use the edge classification network to supervise a visuotactile edge grasp affordance network that can grasp edges with a 90% success rate. Once an edge is grasped, we demonstrate that the robot can slide along the cloth to the adjacent corner in both (a) vertical and (b) horizontal configurations using tactile pose estimation/control in real time.

Supplementary Video

Preprint

Cloth Manipulation

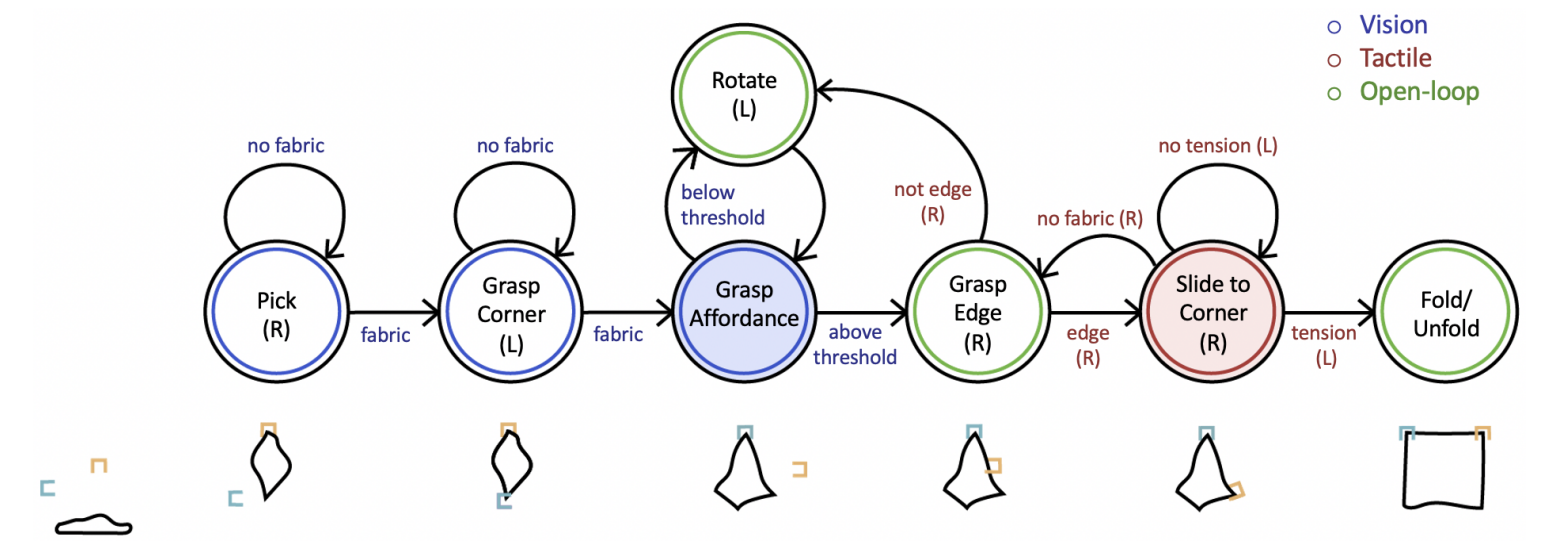

In order to fold or unfold the cloth, we first pick the towel up from a surface, grasp a corner, grasp an edge adjacent to that corner, and slide to the adjacent corner. Once the robot is grasping two corners, it can complete the folding or unfolding task open-loop.

State Machine
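
The sequence above can be sketched as a small state machine. This is only an illustration: the stage names, the `step` and `run` helpers, and especially the recovery transitions on failure are assumptions made for the example, not the transitions used on the robot.

```python
from enum import Enum, auto


class Stage(Enum):
    PICK_UP = auto()
    GRASP_CORNER = auto()
    GRASP_EDGE = auto()
    SLIDE_TO_CORNER = auto()
    FOLD_OPEN_LOOP = auto()
    DONE = auto()


# Order of stages as described in the text.
NEXT_ON_SUCCESS = {
    Stage.PICK_UP: Stage.GRASP_CORNER,
    Stage.GRASP_CORNER: Stage.GRASP_EDGE,
    Stage.GRASP_EDGE: Stage.SLIDE_TO_CORNER,
    Stage.SLIDE_TO_CORNER: Stage.FOLD_OPEN_LOOP,
    Stage.FOLD_OPEN_LOOP: Stage.DONE,
}

# Hypothetical recovery behavior (assumption): where to go when a stage fails.
RETRY_ON_FAILURE = {
    Stage.PICK_UP: Stage.PICK_UP,
    Stage.GRASP_CORNER: Stage.PICK_UP,
    Stage.GRASP_EDGE: Stage.GRASP_EDGE,
    Stage.SLIDE_TO_CORNER: Stage.GRASP_EDGE,
    Stage.FOLD_OPEN_LOOP: Stage.FOLD_OPEN_LOOP,
}


def step(stage, succeeded):
    """Return the next stage given whether the current one succeeded."""
    if stage is Stage.DONE:
        return Stage.DONE
    table = NEXT_ON_SUCCESS if succeeded else RETRY_ON_FAILURE
    return table[stage]


def run(outcomes):
    """Replay a list of success/failure outcomes and return the visited stages."""
    stage = Stage.PICK_UP
    trace = [stage]
    for succeeded in outcomes:
        stage = step(stage, succeeded)
        trace.append(stage)
        if stage is Stage.DONE:
            break
    return trace
```

For example, `run([True, True, False, True, True, True])` retries the edge grasp once before sliding and folding.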

To enable this behavior, we make three main contributions (described further in the sections below):

- Tactile Perception of Local Features. Networks that classify local features of the cloth and estimate their pose

- Visuotactile Grasp Affordances. A grasp localization affordance network pre-trained in simulation and fine-tuned with tactile supervision

- Tactile Sliding. Tactile-based controllers to slide a gripper along the edge of cloth in two different configurations

Tactile Perception

We demonstrate a strategy for local feature pose estimation and classification using tactile perception.

Pose Estimation

Grasp Classification

Pose Estimation: The network takes a single depth image and outputs whether it is grasping an edge, no fabric, or all fabric. If grasping an edge, the network also outputs the position of the edge. We use this network in the tactile sliding controller.

Grasp Classification: This network uses multiple tactile depth frames from the grasp sequence to classify the grasp as edge, no fabric, all fabric, corner, or fold. Multiple frames encode temporal information that helps distinguish folds from the other categories. The network has an overall classification accuracy of 92%. Since our affordance network only needs to distinguish edges from other grasps, we also report an edge versus non-edge classification accuracy of 98%.
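
The gap between the two accuracy figures comes from collapsing the five categories into edge versus non-edge: confusions among the non-edge categories no longer count as errors. A minimal sketch of that bookkeeping follows; the label strings and function names are assumptions for illustration, and the toy labels in the usage note are made up rather than taken from our data.

```python
CLASSES = ("edge", "no_fabric", "all_fabric", "corner", "fold")


def accuracy(predicted, actual):
    """Fraction of positions where the predicted label equals the actual label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def to_binary(labels):
    """Collapse the five grasp categories into edge versus non-edge."""
    return ["edge" if label == "edge" else "non_edge" for label in labels]


def five_class_and_binary_accuracy(predicted, actual):
    """Return (five-class accuracy, edge versus non-edge accuracy)."""
    return accuracy(predicted, actual), accuracy(to_binary(predicted), to_binary(actual))
```

With the toy labels `actual = ["edge", "fold", "corner", "no_fabric"]` and `predicted = ["edge", "corner", "corner", "no_fabric"]`, the fold/corner confusion lowers the five-class accuracy but leaves the binary accuracy untouched.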

Visuotactile Grasp Affordance

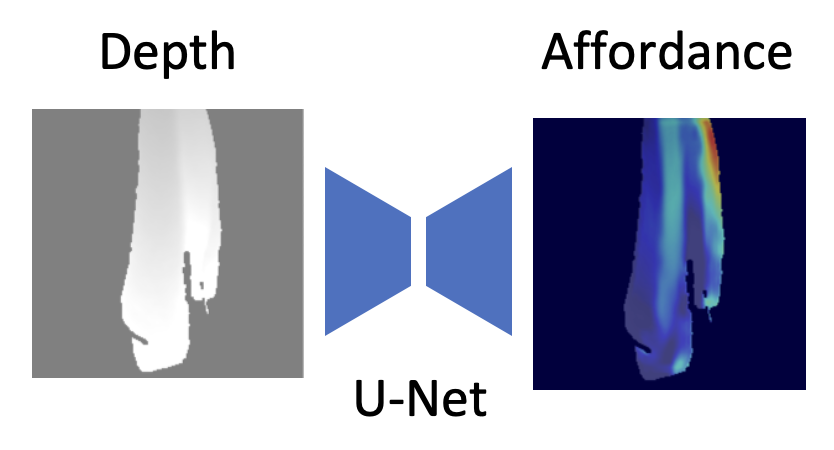

Visual edge detection can be difficult since edges look similar to folds along the contour of the cloth. The cloth edge can also easily twist in on itself, but a successful grasp for sliding requires grasping a single layer with the edge aligned within the gripper to avoid collision. Given these additional considerations, we train a network that learns visuotactile edge grasp affordances (which pixels will result in good edge grasps).

We first train this affordance network in simulation. Then, we fine-tune this network on a robot using the tactile information from the grasp attempt’s success/failure as supervision.

Simulated Dataset

Data Collection on Robot

Simulation: We used Blender to create our simulated dataset. The ground-truth affordance labels in simulation are determined for each pixel by whether (1) it is an edge, (2) the gripper will collide with the cloth, (3) the gripper would grasp a single layer of fabric, and (4) the point is reachable. All of these criteria can be determined in simulation because we have access to the full state of the cloth. We found that learning from simulation provides global structure.
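
One way to picture the labeling rule is as a per-pixel combination of four boolean masks. The sketch below assumes the four criteria have already been rendered as image-sized masks and that a pixel counts as a good grasp only when all four are favorable; the function name, mask names, and this all-criteria rule are assumptions for illustration.

```python
import numpy as np


def affordance_labels(is_edge, collides, single_layer, reachable):
    """Combine per-pixel criteria into a boolean affordance label image.

    Assumed rule: a pixel is a good edge grasp when it lies on an edge, the
    gripper would not collide with the cloth, the grasp would hold a single
    layer of fabric, and the point is reachable.
    """
    masks = [np.asarray(m, dtype=bool) for m in (is_edge, collides, single_layer, reachable)]
    if len({m.shape for m in masks}) != 1:
        raise ValueError("all masks must have the same shape")
    is_edge, collides, single_layer, reachable = masks
    return is_edge & ~collides & single_layer & reachable
```

Here `collides` is the only mask that is negated, since a collision disqualifies the pixel.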

Fine-Tuning: We collected around 3,000 grasps to fine-tune our network on the real robot. We used tactile supervision from our grasp classification network to determine if an edge was successfully grasped.
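
Each real grasp yields a label for only the one pixel that was attempted, so the supervision is sparse. A minimal sketch of such a per-grasp loss is below, assuming the network outputs a per-pixel logit map and that binary cross-entropy is applied at the attempted pixel only. The loss choice and the function name are assumptions, not a description of our training code.

```python
import numpy as np


def grasp_pixel_bce(affordance_logits, pixel, edge_grasped):
    """Binary cross-entropy at the single attempted grasp pixel.

    affordance_logits: 2D array of per-pixel logits (assumed network output).
    pixel: (row, col) of the attempted grasp.
    edge_grasped: outcome reported by the tactile grasp classifier.
    """
    row, col = pixel
    logit = float(affordance_logits[row, col])
    target = 1.0 if edge_grasped else 0.0
    # Numerically stable form of BCE with logits:
    # max(x, 0) - x * t + log(1 + exp(-|x|))
    return float(max(logit, 0.0) - logit * target + np.log1p(np.exp(-abs(logit))))
```

Pixels that were never attempted contribute nothing to this loss.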

Tactile Sliding

We implemented tactile sliding in two different configurations: vertical and the more challenging horizontal configuration.

Vertical Sliding

Horizontal Sliding

Vertical Sliding: The sliding gripper moves forward and back to keep the edge close to the target position within the grip until the robot reaches a corner. We used a proportional controller with respect to the fabric edge’s offset from the target position. An increase in shear (as determined by the tactile sensor) indicates that the sliding gripper has reached the thicker corner.
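
The control law described above can be sketched as a single step function. The gain `kp`, the shear threshold `shear_ratio`, the sign convention, and the function name are all hypothetical values chosen for illustration; the real controller's parameters are not given here.

```python
def vertical_slide_step(edge_offset, target_offset, shear, baseline_shear,
                        kp=0.5, shear_ratio=1.5):
    """One step of a proportional sliding controller with corner detection.

    Returns (correction, at_corner). The correction is the forward/back
    gripper motion, proportional to the edge's offset from the target
    position inside the grip. A rise in shear above shear_ratio times the
    baseline is treated as reaching the thicker corner (assumed threshold).
    """
    at_corner = shear > shear_ratio * baseline_shear
    if at_corner:
        return 0.0, True  # stop correcting once the corner is detected
    correction = kp * (target_offset - edge_offset)
    return correction, False
```

Calling this once per tactile frame and stopping when `at_corner` is true reproduces the behavior described in the paragraph above.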

Horizontal Sliding: This configuration is harder because the weight of the towel makes it more likely to slip from the grip. We learned a linear dynamics model that finds the relationship between state (defined by cloth edge position and orientation and robot position) and pulling angle. This is done in a similar fashion to how we implemented cable following. We used this model in an LQR controller that keeps the edge close to the target state as the cloth is pulled through.
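
As a rough sketch of this pipeline, the code below fits a linear model x_next = A x + B u by least squares and computes a discrete-time LQR gain by iterating the Riccati recursion. Here x stands for the state and u for the pulling angle. The function names, cost matrices, and the use of plain least squares are assumptions; this is not our controller code.

```python
import numpy as np


def fit_linear_dynamics(states, actions, next_states):
    """Least-squares fit of x_next = A x + B u from logged transitions.

    states: (N, n), actions: (N, m), next_states: (N, n).
    """
    regressors = np.hstack([states, actions])               # (N, n + m)
    theta, *_ = np.linalg.lstsq(regressors, next_states, rcond=None)
    n = states.shape[1]
    A = theta[:n].T                                          # (n, n)
    B = theta[n:].T                                          # (n, m)
    return A, B


def lqr_gain(A, B, Q, R, iterations=500):
    """Discrete-time LQR gain K (for u = -K x) via Riccati iteration."""
    P = Q.copy()
    for _ in range(iterations):
        BtP = B.T @ P
        K = np.linalg.solve(R + BtP @ B, BtP @ A)
        P = Q + A.T @ P @ (A - B @ K)
    return K
```

With the state expressed as the deviation from the target state, the control at each step would be `u = -K @ x`.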